Imagine a vast library filled with millions of books. Instead of cataloguing every single page, you decide to summarise each book with a single keyword or code that best represents its content. This is the essence of Vector Quantisation (VQ) — simplifying complex data by representing large groups of similar items with a single representative value.

In the world of data science, this technique reduces storage requirements and computational complexity, much like turning pages into summaries. But behind this simplicity lies an elegant balance between precision and efficiency.

Understanding the Essence of Vector Quantisation

At its core, Vector Quantisation compresses data by partitioning it into clusters, each represented by a single vector called a codeword; the full set of codewords is the codebook. Rather than storing every data point in full, the encoder stores only the index of its nearest codeword, plus one shared copy of the codebook. This reduces memory use but discards the difference between each point and its codeword, which is why VQ is a form of lossy compression.
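To put rough numbers on the saving, here is a small back-of-the-envelope sketch. It assumes fixed-length indices and a codebook stored at the same precision as the data; the function name `vq_storage_bits` and the example sizes are made up for illustration.

```python
import math

def vq_storage_bits(n_vectors, dim, bits_per_component, codebook_size):
    """Return (raw_bits, compressed_bits), assuming fixed-length indices."""
    raw_bits = n_vectors * dim * bits_per_component
    # Each vector is replaced by one index into the codebook.
    index_bits = math.ceil(math.log2(codebook_size))
    # The codebook itself is stored once and shared by every vector.
    codebook_bits = codebook_size * dim * bits_per_component
    compressed_bits = n_vectors * index_bits + codebook_bits
    return raw_bits, compressed_bits

# Hypothetical example: 10,000 vectors of 16 32-bit values, 256 codewords.
raw, compressed = vq_storage_bits(10_000, 16, 32, 256)
ratio = raw / compressed
print(raw, compressed, round(ratio, 1))  # 5120000 211072 24.3
```

In this made-up case the data shrinks roughly 24-fold. Note that the codebook overhead matters: with only a few vectors to compress, storing the codebook can cost more than it saves.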

Imagine taking a high-resolution photograph and storing it with fewer colours. The overall picture remains recognisable, but subtle details fade. Similarly, in VQ, the trade-off between accuracy and compactness is deliberate, designed to prioritise efficiency where perfect precision is not essential.

For learners seeking to understand how algorithms like VQ function in real-world contexts, enrolling in a data science course provides practical exposure. It helps bridge the gap between mathematical foundations and practical data compression methods used in industries like telecommunications and computer vision.

How Vector Quantisation Works

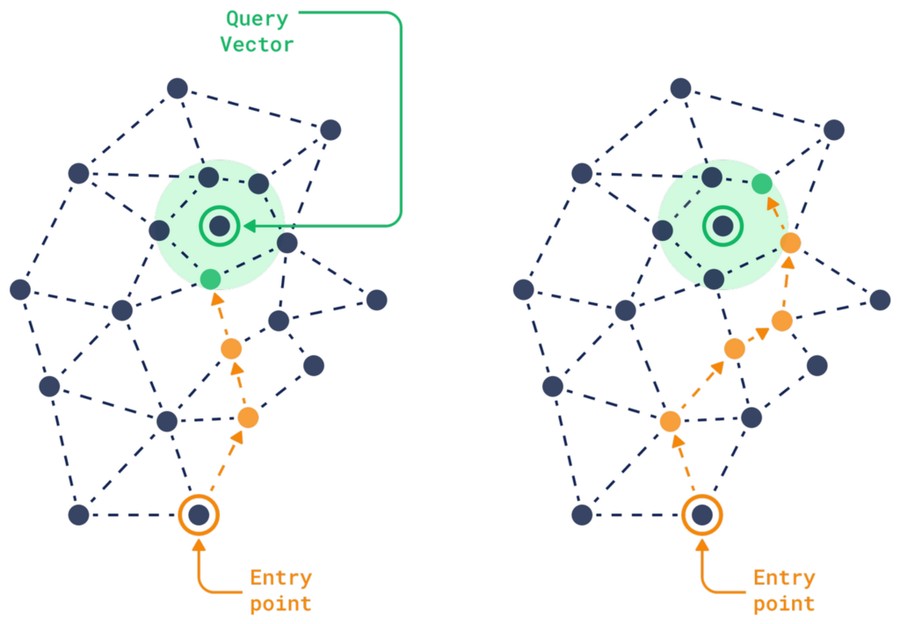

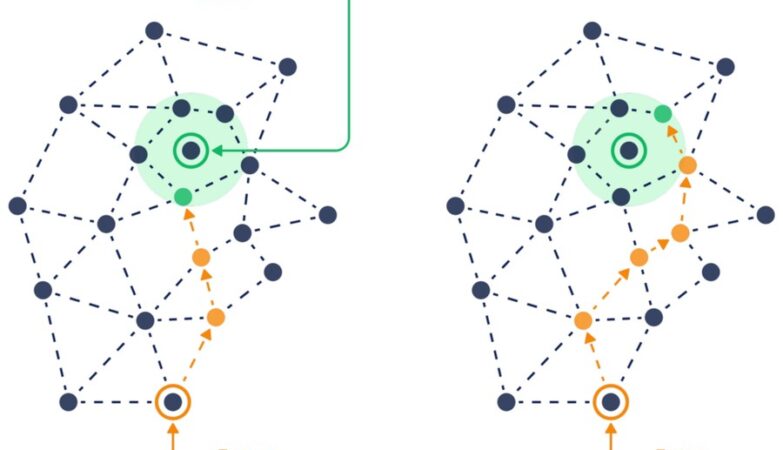

The process of VQ resembles training a team of interpreters. You feed the system a large dataset (like spoken words in different accents), and it learns how to group similar inputs together. Each group forms a cluster, represented by its centroid — the codeword.

During compression, each incoming vector is replaced by the index of its closest codeword in the codebook. Decompression is a simple lookup: each index is swapped back for its codeword. The restored data is not identical to the original, but it keeps the key features needed for analysis or display.
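The compression and decompression steps described above can be sketched in a few lines. This is a minimal illustration, assuming squared Euclidean distance as the measure of closeness and a tiny hand-picked codebook; the names `encode` and `decode` are illustrative rather than taken from any library.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def encode(vectors, codebook):
    """Replace each vector with the index of its nearest codeword."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(v, codebook[k]))
        for v in vectors
    ]

def decode(indices, codebook):
    """Reconstruct an approximation by looking each index up in the codebook."""
    return [codebook[i] for i in indices]

# Hand-picked codebook and data, purely for illustration.
codebook = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]
data = [(0.1, -0.2), (0.9, 1.2), (3.5, 0.4), (1.1, 0.8)]

indices = encode(data, codebook)      # [0, 1, 2, 1]
restored = decode(indices, codebook)  # codewords standing in for the originals
print(indices, restored)
```

Only `indices` (and the codebook) would need to be stored or transmitted. The restored vectors are the codewords themselves, so any detail that distinguished the second and fourth data points from each other is gone.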

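In practice, the codebook is usually trained with Lloyd’s algorithm, the standard iterative procedure behind K-Means clustering (in the VQ literature, its vector form is often called the generalised Lloyd or Linde-Buzo-Gray algorithm). It alternates between assigning each vector to its nearest codeword and moving each codeword to the mean of its assigned vectors, until the assignments stop changing. The result is a locally optimal codebook rather than a guaranteed best one, so the outcome depends on the starting codewords.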
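A bare-bones version of the Lloyd-style iteration might look like the sketch below. It is a simplified illustration, not a production implementation: it assumes squared Euclidean distance, takes the starting codebook as an argument, caps the number of passes, and leaves a codeword unchanged if no vectors are assigned to it. The function name `train_codebook` is hypothetical.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_index(vector, codebook):
    return min(range(len(codebook)),
               key=lambda k: squared_distance(vector, codebook[k]))

def train_codebook(vectors, initial_codebook, iterations=20):
    """Refine a codebook by alternating assignment and centroid updates."""
    codebook = list(initial_codebook)
    for _ in range(iterations):
        # Assignment step: group each vector with its nearest codeword.
        clusters = [[] for _ in codebook]
        for v in vectors:
            clusters[nearest_index(v, codebook)].append(v)
        # Update step: move each codeword to the mean of its cluster.
        updated = []
        for old, members in zip(codebook, clusters):
            if members:
                updated.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                updated.append(old)  # assumption: keep an unused codeword as-is
        if updated == codebook:      # nothing moved, so we have converged
            break
        codebook = updated
    return codebook

# Toy data: two tight groups of points, far apart.
data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
        (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0)]
trained = train_codebook(data, initial_codebook=[data[0], data[3]])
print(trained)  # [(0.5, 0.5), (10.5, 10.5)]
```

Even though both starting codewords come from the same group here, the updates pull one of them across to the second group within a few passes. That is not guaranteed in general: an unlucky starting codebook can leave the procedure settled on a noticeably worse grouping, which is why practical implementations often try several initialisations.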

Applications Across Industries

Vector Quantisation may sound abstract, but its fingerprints are everywhere, from speech coding to image compression. In speech compression, VQ replaces the parameter vector describing each short frame of audio (for example, its spectral shape) with the index of a representative pattern. In image processing, it maps small blocks of pixels, or individual colours, to a limited set of codewords to reduce storage and transmission costs.

Even modern neural networks, such as VQ-VAEs (Vector Quantised Variational Autoencoders), rely on this technique: the encoder’s continuous output is snapped to the nearest entry in a learned codebook, giving discrete latent codes. This approach has been influential in generative modelling because it pairs the expressive power of deep networks with the compactness of a discrete code.
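For readers who prefer symbols, the quantisation step and training objective of a VQ-VAE are usually written roughly as follows. The notation here is an assumption chosen for illustration: z_e(x) is the encoder output, e_j are the codebook vectors, sg[·] is the stop-gradient operator, β is a weighting hyperparameter, and the reconstruction term is left generic.

```latex
% Quantisation: pick the nearest codebook vector to the encoder output.
k = \arg\min_{j} \lVert z_e(x) - e_j \rVert_2,
\qquad z_q(x) = e_k

% Objective: reconstruction + codebook term + commitment term.
\mathcal{L} = \mathcal{L}_{\text{recon}}(x, \hat{x})
  + \lVert \operatorname{sg}[z_e(x)] - e_k \rVert_2^2
  + \beta \, \lVert z_e(x) - \operatorname{sg}[e_k] \rVert_2^2
```

The first line is the same nearest-codeword rule used in classical VQ. The second and third terms of the objective pull the chosen codeword towards the encoder output and the encoder output towards the codeword, respectively, so the codebook is learned jointly with the network.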

Professionals exploring these applications through a data science course in Mumbai gain hands-on exposure to modern frameworks that leverage VQ concepts in compression, feature extraction, and clustering. Such understanding prepares them to design models that are both efficient and scalable.

Advantages and Limitations of VQ

The power of Vector Quantisation lies in its simplicity and versatility. It enables faster data transmission, reduces storage costs, and enhances computational efficiency. Because VQ groups data based on similarity, it’s especially effective in repetitive datasets, such as video frames or sensor readings.

However, this technique isn’t without drawbacks. Its lossy nature means that fine details are sacrificed, and designing an optimal codebook requires careful calibration. Too few clusters lead to poor quality, while too many reduce compression benefits. Balancing these parameters requires both mathematical understanding and experimental tuning.
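The trade-off between codebook size and quality is easy to see on a toy problem. The sketch below is deliberately simplified: it uses one-dimensional "vectors" and evenly spaced codewords instead of a trained codebook, and measures quality as mean squared error. All names and sizes are illustrative assumptions.

```python
import math

def mean_squared_error(samples, codebook):
    """Average squared gap between each sample and its nearest codeword."""
    total = 0.0
    for x in samples:
        nearest = min(codebook, key=lambda c: (x - c) ** 2)
        total += (x - nearest) ** 2
    return total / len(samples)

# 1,000 evenly spread values between 0 and 1 stand in for real data.
samples = [i / 999 for i in range(1000)]

results = []
for k in (2, 4, 16, 64):
    # Evenly spaced codewords: the midpoints of k equal-width bins.
    codebook = [(j + 0.5) / k for j in range(k)]
    bits_per_index = math.ceil(math.log2(k))
    results.append((k, bits_per_index, mean_squared_error(samples, codebook)))

for k, bits, mse in results:
    print(f"{k:>3} codewords  {bits} bits/index  MSE {mse:.6f}")
```

Each increase in codebook size lowers the error but raises the number of bits needed per stored index, and the codebook itself grows too. Where to stop depends on how much distortion the application can tolerate.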

Why Vector Quantisation Still Matters

In an age where deep learning dominates, it’s easy to overlook foundational techniques like VQ. Yet even modern AI systems depend on compact, efficient representations, the same goal VQ has served for decades.

Understanding methods like Vector Quantisation gives data scientists the ability to build lighter, faster, and more interpretable systems. It also reinforces an appreciation for how classic mathematical ideas continue to power cutting-edge technology.

Learners who master these concepts through structured programs like a data science course in Mumbai often discover that timeless principles form the backbone of modern innovations — from self-driving cars to intelligent compression algorithms.

Conclusion

Vector Quantisation is more than just a compression technique; it’s a reminder that simplicity, when executed intelligently, can achieve extraordinary results. By representing vast datasets through compact codes, VQ teaches the art of abstraction — finding meaning within complexity.

As data continues to grow in volume and diversity, professionals who understand these techniques will be invaluable. Enrolling in a data science course offers a clear pathway to mastering such methods, helping future analysts and engineers navigate the ever-evolving landscape of data-driven innovation.

Business Name: ExcelR- Data Science, Data Analytics, Business Analyst Course Training Mumbai

Address: Unit no. 302, 03rd Floor, Ashok Premises, Old Nagardas Rd, Nicolas Wadi Rd, Mogra Village, Gundavali Gaothan, Andheri E, Mumbai, Maharashtra 400069, Phone: 09108238354, Email: enquiry@excelr.com.